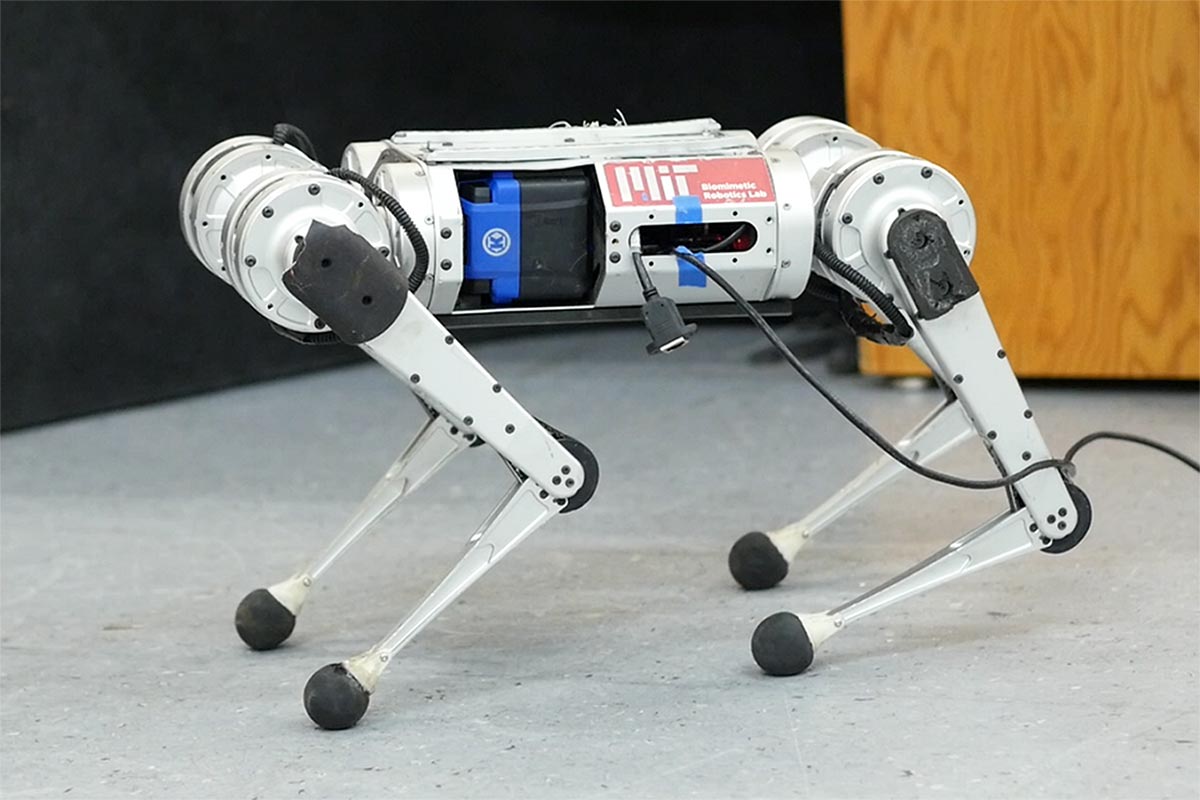

MIT’s mini cheetah, which uses a model-free reinforcement learning system, broke the record for the fastest recorded run. Credit: Photo courtesy of MIT CSAIL.

CSAIL scientists developed a learning pipeline for the four-legged robot, which learns to run entirely through trial and error in simulation.

It’s been roughly 23 years since one of the first robotic animals arrived on the scene, defying classical notions of our cuddly four-legged friends. Since then, a barrage of walking, dancing, and door-opening machines has made its presence felt, an elegant blend of batteries, sensors, metal, and motors. Missing from the list of cardio activities was one that humans both love and loathe (depending on whom you ask), and that proved a little harder for the bots: learning to run.

Researchers from MIT’s Improbable AI Lab, part of the Computer Science and Artificial Intelligence Laboratory (CSAIL) and directed by MIT Assistant Professor Pulkit Agrawal, as well as the Institute for Artificial Intelligence and Fundamental Interactions (IAIFI), have been working on fast-paced strides for a robotic mini cheetah, and their model-free reinforcement learning system broke the record for the fastest run recorded. Here, MIT PhD student Gabriel Margolis and IAIFI postdoc Ge Yang discuss just how fast the cheetah can run.

Q: We’ve seen videos of robots running before. Why is running harder than walking?

A: Achieving fast running requires pushing the hardware to its limits, for example by operating near the maximum torque output of the motors. In such conditions, the robot dynamics are hard to model analytically. The robot also needs to respond quickly to changes in the environment, such as the moment it encounters ice while running on grass. If the robot is walking, it is moving slowly and the presence of snow is not typically an issue. Imagine walking slowly but carefully: you can traverse almost any terrain. Today’s robots face an analogous problem: many of them move on every terrain as if they were walking on ice, which is very inefficient. Humans run fast on grass and slow down on ice; we adapt. Giving robots a similar ability requires quickly identifying changes in terrain and adapting fast enough to keep the robot from falling over. In summary, high-speed running is more challenging than walking because it’s impractical to build analytical (human-designed) models of all possible terrains in advance, and because the robot’s dynamics become more complex at high velocities.

The MIT Mini Cheetah learns to run faster than ever using a learning pipeline based entirely on trial and error in simulation.

Q: Previous agile locomotion controllers for the MIT Cheetah 3 and Mini Cheetah, as well as for Boston Dynamics robots, are “analytically designed”: they rely on human engineers to analyze the physics of locomotion, formulate efficient abstractions, and implement a specialized hierarchy of controllers to make the robot balance and run. You use a “learn-by-experience” model instead of programming the robot. Why?

A: It’s just very difficult to program how a robot should behave in every possible situation. The process is tedious: if a robot fails on a certain terrain, a human engineer has to identify the cause of the failure and manually adjust the robot’s controller, which can take a lot of human time. Learning through trial and error removes the need for a human to dictate exactly how the robot should behave in each situation. This works if (1) the robot can experience an extremely wide range of terrains, and (2) the robot can automatically improve its behavior with experience.
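The two conditions above (broad experience plus automatic improvement from it) can be illustrated with a deliberately tiny, model-free trial-and-error loop. This is a minimal sketch, not the researchers’ training algorithm: the `simulate` function is a hypothetical stand-in for a physics simulator, the two-number “policy” and the target values are invented for illustration, and the improvement rule is plain random-search hill climbing rather than the reinforcement learning method used on the Mini Cheetah.

```python
import random

def simulate(params, target=(0.8, -0.3)):
    """Hypothetical stand-in for a physics simulator: returns a scalar reward.

    The learner never inspects this function; it only sees the reward it
    returns, which is what makes the loop below model-free.
    """
    # Reward is highest (0.0) when the parameters match an unknown target.
    return -sum((p - t) ** 2 for p, t in zip(params, target))

def trial_and_error(n_iters=2000, step=0.1, seed=0):
    rng = random.Random(seed)
    params = [0.0, 0.0]            # initial (hypothetical) policy parameters
    best = simulate(params)
    for _ in range(n_iters):
        # Trial: try a small random change to the current behavior.
        candidate = [p + rng.gauss(0.0, step) for p in params]
        reward = simulate(candidate)
        # Error correction: keep the change only if it did better.
        if reward > best:
            params, best = candidate, reward
    return params, best

params, best = trial_and_error()
print(params, best)
```

No human tells the loop which direction to move the parameters; improvement comes only from comparing outcomes of attempts, which is the property the answer above relies on.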

Thanks to modern simulation tools, our robot can gain 100 days of experience on different terrains in just three hours of actual time. We developed an approach that improves the robot’s behavior through simulated experience and, crucially, also lets those learned behaviors work successfully in the real world. The intuition behind why the robot’s running skills transfer well to the real world is this: of all the environments it sees in the simulator, some will teach the robot skills that are useful in the real world. When operating in the real world, our controller identifies and executes the relevant skills in real time.
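For scale, 100 days is 2,400 hours, so gathering it in three hours of wall-clock time means simulating roughly 800 times faster than real time. The idea that varied simulated environments teach transferable skills is commonly implemented by randomizing physical parameters per environment (often called domain randomization). The sketch below shows only that sampling step; the parameter names, their ranges, and the count of 4,096 environments are hypothetical choices for illustration, not values reported by the researchers.

```python
import random
from dataclasses import dataclass

@dataclass
class TerrainParams:
    friction: float      # ground friction coefficient (ice-like is low, grass higher)
    roughness_m: float   # height variation of the ground, in meters
    payload_kg: float    # extra mass added to the robot body, in kilograms

def sample_terrain(rng):
    """Draw one randomized training environment (ranges are assumptions)."""
    return TerrainParams(
        friction=rng.uniform(0.05, 1.2),
        roughness_m=rng.uniform(0.0, 0.1),
        payload_kg=rng.uniform(0.0, 2.0),
    )

def make_training_set(n_envs, seed=0):
    rng = random.Random(seed)
    # Each simulated environment gets its own physics settings, so the
    # policy experiences a wide spread of conditions during training.
    return [sample_terrain(rng) for _ in range(n_envs)]

envs = make_training_set(4096)
icy = sum(e.friction < 0.2 for e in envs)
print(f"{icy} of {len(envs)} environments are ice-like (friction < 0.2)")
```

A policy trained across all of these settings cannot rely on any single friction value, which is one way to encourage the real-time adaptation described above.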

Q: Can this approach be scaled beyond the mini cheetah? What excites you about its future applications?

A: At the heart of artificial intelligence research is the trade-off between what humans have to build in (nature) and what the machine can learn on its own (nurture). The traditional paradigm in robotics is that humans tell the robot both what task to do and how to do it. The problem is that such a framework doesn’t scale, since it would require immense human engineering effort to manually program a robot with the skills to work in many different environments. A more practical way to build a robot with many different skills is to tell the robot what to do and let it figure out how. Our system is an example of this. In our lab, we’ve started applying this paradigm to other robotic systems, including hands that can pick up and manipulate many different objects.
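In reinforcement learning, “telling the robot what to do” usually means writing a reward function. The hypothetical reward below scores how closely the measured forward speed matches a commanded speed; it is an illustrative assumption, not the reward used for the Mini Cheetah, and the `sigma` width is an arbitrary choice.

```python
import math

def velocity_tracking_reward(commanded_vx: float, measured_vx: float,
                             sigma: float = 0.25) -> float:
    """Hypothetical reward that specifies WHAT to achieve (match a commanded
    forward speed, in m/s) but says nothing about HOW: no gait, foot timing,
    or joint trajectory is prescribed."""
    error = commanded_vx - measured_vx
    # 1.0 for perfect tracking, decaying smoothly toward 0 as the error grows.
    return math.exp(-(error ** 2) / sigma)

print(velocity_tracking_reward(3.0, 3.0))  # 1.0
print(velocity_tracking_reward(3.0, 2.0))
```

Because the reward only grades the outcome, the learner is free to discover whatever leg motions earn the highest score; the “how” emerges from training rather than from hand-written rules.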

This work was supported by the DARPA Machine Common Sense Program, the MIT Biomimetic Robotics Lab, NAVER LABS, and, in part, by the National Science Foundation AI Institute for Artificial Intelligence and Fundamental Interactions, the United States Air Force-MIT AI Accelerator, and the MIT-IBM Watson AI Lab. The research was conducted by the Improbable AI Lab.